Peter Lobner

The 28 September 2016 article by Roger Parloff, entitled, “Why Deep Learning is Suddenly Changing Your Life,” is well worth reading to get a general overview of the practical implications of this subset of artificial intelligence (AI) and machine learning. You’ll find this article on the Fortune website at the following link:

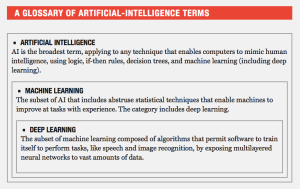

Here, the relationship between AI, machine learning and deep learning is put in perspective as shown in the following table.

This article also includes a helpful timeline to illustrate the long history of technical development, from 1958 to today, that has led to the modern technology of deep learning.

Another overview article worth your time is by Robert D. Hof, entitled, “Deep Learning – With massive amounts of computational power, machines can now recognize objects and translate speech in real time. Artificial intelligence is finally getting smart.” This article is in the MIT Technology Review, which you will find at the following link:

https://www.technologyreview.com/s/513696/deep-learning/

As noted in both articles, we’re seeing the benefits of deep learning technology in the remarkable improvements in image and speech recognition systems that are being incorporated into modern consumer devices and vehicles, and less visibly, in military systems. For example, see my 31 January 2016 post, “Rise of the Babel Fish,” for a look at two competing real-time machine translation systems: Google Translate and ImTranslator.

The rise of deep learning has depended on two key technologies:

Deep neural nets: These are layers of neural nets that progressively build up the complexity needed for real-time image and speech recognition. Robert D. Hof explains: “The first layer learns primitive features, like an edge in an image or the tiniest unit of speech sound. It does this by finding combinations of digitized pixels or sound waves that occur more often than they should by chance. Once that layer accurately recognizes those features, they’re fed to the next layer, which trains itself to recognize more complex features, like a corner or a combination of speech sounds. The process is repeated in successive layers until the system can reliably recognize phonemes or objects… Because the multiple layers of neurons allow for more precise training on the many variants of a sound, the system can recognize scraps of sound more reliably, especially in noisy environments…”

Big data: Roger Parloff reported: “Although the Internet was awash in it (data), most data—especially when it came to images—wasn’t labeled, and that’s what you needed to train neural nets. That’s where Fei-Fei Li, a Stanford AI professor, stepped in. ‘Our vision was that big data would change the way machine learning works,’ she explains in an interview. ‘Data drives learning.’

In 2007 she launched ImageNet, assembling a free database of more than 14 million labeled images. It went live in 2009, and the next year she set up an annual contest to incentivize and publish computer-vision breakthroughs.

In October 2012, when two of Hinton’s students won that competition, it became clear to all that deep learning had arrived.”
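
The layer-by-layer idea in Hof's description above can be illustrated with a toy sketch. This is only an illustration under loud assumptions: the signal is one-dimensional, the function names (`layer1_edges`, `layer2_bumps`) are hypothetical, and the feature detectors are set by hand, whereas a real deep neural net learns them from labeled training data.

```python
def relu(x):
    """Pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


def layer1_edges(signal):
    """First layer: primitive features (rising and falling edges).

    Hand-set for illustration; a trained network would learn these.
    """
    pairs = list(zip(signal, signal[1:]))
    rising = [relu(b - a) for a, b in pairs]
    falling = [relu(a - b) for a, b in pairs]
    return rising, falling


def layer2_bumps(rising, falling, width):
    """Second layer: a more complex feature built only from layer-1 outputs.

    A "bump" is a rising edge followed `width` steps later by a falling edge.
    """
    return [min(r, f) for r, f in zip(rising, falling[width:])]


# A tiny 1-D "image": dark, dark, bright, bright, dark, dark
signal = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
rising, falling = layer1_edges(signal)
bumps = layer2_bumps(rising, falling, width=2)
print(rising)   # edge responses from the first layer
print(bumps)    # combined feature from the second layer
```

The second layer never looks at the raw pixels; it only combines what the first layer found, which is the point of stacking layers.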

The combination of these technologies has resulted in very rapid improvements in image and speech recognition capabilities and performance, and in their use in marketable products and services. Typically, the latest capabilities and performance appear at the top of a market and then rapidly proliferate down into the lower-priced end of the market.

For example, Tesla cars include a camera system capable of identifying lane markings, obstructions, animals and much more, including reading signs, detecting traffic lights, and determining road composition. On a recent trip in Europe, I had a much more modest Ford Fusion with several of these image recognition and associated alerting capabilities. You can see a Wall Street Journal video on how Volvo is incorporating kangaroo detection and alerting into their latest models for the Australian market.

I believe the first Teslas in Australia incorrectly identified kangaroos as dogs. Within days, the Australian Teslas were updated remotely with the capability to correctly identify kangaroos.

Regarding the future, Robert D. Hof noted: “Extending deep learning into applications beyond speech and image recognition will require more conceptual and software breakthroughs, not to mention many more advances in processing power. And we probably won’t see machines we all agree can think for themselves for years, perhaps decades—if ever. But for now, says Peter Lee, head of Microsoft Research USA, ‘deep learning has reignited some of the grand challenges in artificial intelligence.’”

Actually, I think there’s more to the story of what potentially lies beyond the demonstrated capabilities of deep learning in the areas of speech and image recognition. If you’ve read Douglas Adams’ “The Hitchhiker’s Guide to the Galaxy,” you have already had a glimpse of that future, in which the great computer, Deep Thought, was asked for “the answer to the ultimate question of life, the universe and everything.” Surely, this would be the ultimate test of deep learning.

Asking the ultimate question to the great computer Deep Thought. Source: BBC / The Hitchhiker’s Guide to the Galaxy

In case you’ve forgotten the answer, either of the following two videos will refresh your memory.

From the original 1981 BBC TV serial (12:24 min):

https://www.youtube.com/watch?v=cjEdxO91RWQ

From the 2005 movie (2:42 min):