Peter Lobner

In 1975, I was shooting photographs with a Nikon F2 single lens reflex (SLR) film camera I bought two years before. The F2 was introduced by Nikon in September 1971, and was still Nikon’s top-of-the-line SLR when I bought it in 1973. I shot slide film because I liked the quality of the large projected images. I was quite happy with my Kodak Carousel slide projector and circular slide trays, even though the trays took up a lot of storage space. Getting print copies of slides for family and friends took time and money, but I was used to that. Little did I suspect, at the time, that a revolution was brewing at Eastman Kodak.

The first digital camera prototype: 1975

In 1975, Steve Sasson invented the digital camera while working at Kodak. This first digital camera weighed 8 pounds (3.6kg), was capable of taking 0.01 megapixel (10,000 pixel) black & white photos, and storing 30 photos on a removable digital magnetic tape cassette. An image captured by the camera’s 100 x 100 pixel Fairchild CCD (charge coupled device) sensor was stored in RAM (random access memory) in about 50 milliseconds (ms). Then it took 23 seconds to record one image to the digital cassette tape. For the first time, photos were captured on portable digital media, which made it easy to rapidly move the image files into other digital systems.

Steve Sasson & the first digital camera. Source: MagaPixel

David Friedman, who has interviewed many contemporary inventors, interviewed Steve Sasson in 2011. I think you’ll enjoy his short video interview, which reveals details of how the first digital camera was designed and built, at the following link:

https://vimeo.com/22180298

Arrival of consumer digital cameras: 1994

In February 1994, almost 20 years after Steve Sasson’s first digital camera, Apple introduced the Kodak-manufactured QuickTake 100, which was the first mass market color consumer digital camera available for under $1,000.

Apple QuickTake 100. Source: Apple

The QuickTake 100 could take digital photos at either 0.3 megapixels (high-resolution) or 0.08 megapixels (standard-resolution), and store the image files on an internal (not removable) 1MB flash EPROM (erasable programmable read-only memory). The EPROM could store 32 standard or eight high-resolution images, or a combination. Once downloaded, these modest-resolution images were adequate for many applications requiring small images, such as pasting a photo into an electronic document.
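To make the "or a combination" trade-off concrete, here is a minimal Python sketch. It assumes the camera's memory was shared proportionally, so that one high-resolution image cost as much space as 32 / 8 = 4 standard-resolution images. That proportional rule and the function name `remaining_standard_shots` are my own assumptions for illustration, not something taken from Apple's documentation.

```python
STANDARD_CAPACITY = 32  # standard-resolution images that fit, per the text
HIGH_RES_CAPACITY = 8   # high-resolution images that fit, per the text

# Assumption: one high-resolution image uses the space of
# 32 / 8 = 4 standard-resolution images.
STANDARD_PER_HIGH_RES = STANDARD_CAPACITY // HIGH_RES_CAPACITY


def remaining_standard_shots(high_res_taken: int) -> int:
    """Standard-resolution shots still available after some high-res shots."""
    if not 0 <= high_res_taken <= HIGH_RES_CAPACITY:
        raise ValueError("high_res_taken must be between 0 and 8")
    return STANDARD_CAPACITY - high_res_taken * STANDARD_PER_HIGH_RES


# List every mix of high-resolution and standard-resolution shots.
for n in range(HIGH_RES_CAPACITY + 1):
    print(f"{n} high-res + {remaining_standard_shots(n)} standard")
```

Under that assumption, shooting three high-resolution photos would leave room for 20 standard-resolution ones.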

In the following years before the millennium, the consumer and professional photography markets were flooded with a vast array of rapidly improving digital cameras and much lower prices for entry-level models. While my old Nikon F2 film camera remained a top-of-the-line camera for many years back in the 1970s, many newly introduced digital cameras were obsolete by the time they were available in the marketplace.

For a comprehensive overview of the evolution of digital photography, I refer you to Roger L. Carter’s DigiCamHistory website, which contains an extensive history of film and digital photography from the 1880s through 1999.

http://www.digicamhistory.com/Index.html

Film cameras are dead – well almost. On 22 June 2009, Kodak announced that it would cease selling Kodachrome film by the end of 2009, ending that film’s 74-year run. Kodak has since retreated from much of the film business, although it continues to produce professional film for movies. FujiFilm and several other manufacturers continue to offer a range of print and slide film. You can read an assessment of the current state of the film photography industry at the following link:

http://www.thephoblographer.com/2015/04/23/manufacturers-talk-state-film-photography-industry/#.V73QqWWVtbc

Arrival of camera phones: 2000

In the new millennium, a new type of camera arrived: the camera phone, which was first introduced in Japan in 2000. There seems to be some disagreement as to which was the first camera phone. The leading contenders are:

- Samsung SCH-V200, which could take 0.35 megapixel photos and store them on an internal memory device

- Sharp (J-Phone) J-SH04, which could take 0.11 megapixel photos and send them electronically

At that time, small point-and-shoot digital cameras typically were taking much better photos in the 0.78 – 1.92 megapixel range (1024 x 768 pixels to 1600 x 1200 pixels), with high-end digital SLRs taking 10 megapixel photos (3888 x 2592 pixels).
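As a quick check on the arithmetic, megapixels are simply width times height in pixels, divided by one million. The short Python sketch below applies that to the resolutions quoted in this post; the function name `megapixels` and the labels are mine, purely for illustration.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Return the pixel count in megapixels (millions of pixels)."""
    return width_px * height_px / 1_000_000


# Pixel dimensions quoted in this post.
RESOLUTIONS = {
    "Sasson prototype (1975)": (100, 100),
    "Point-and-shoot, low end": (1024, 768),
    "Point-and-shoot, high end": (1600, 1200),
    "High-end digital SLR": (3888, 2592),
}

for label, (w, h) in RESOLUTIONS.items():
    print(f"{label}: {w} x {h} = {megapixels(w, h):.2f} megapixels")
```

Note that 1024 x 768 works out to 786,432 pixels, which is quoted above as 0.78 megapixels and prints here as 0.79 after rounding.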

In November 2002, Sprint became the first service provider in the U.S. to offer a camera phone, the Sanyo SCP-5300, which could take 0.3 megapixel (640 x 480 pixels) photos and included many features found on dedicated digital cameras.

Sanyo SCP-5300. Source: Sprint

In late 2003, the Ericsson Z1010 mobile phone introduced the front-facing camera, which enabled the user to conveniently take a “selfie” photo or video while previewing the image on the phone’s video display. Narcissists around the world rejoiced! A decade later they rejoiced again following the invention of the now ubiquitous and annoying “selfie stick”.

Ericsson Z1010. Source: www.GSMArena.com

You’ll find more details on the history of the camera phone at the following link:

http://www.digitaltrends.com/mobile/camera-phone-history/

Arrival of smartphones:

The 1993 IBM Simon is generally considered to be the first “smart phone.” It could function as a phone, pager, and PDA (personal digital assistant), with simple applications for calendar, calculator, and address book, but no built-in camera. The important feature of the smart phone was its ability to run various applications to expand its functionality.

The first mobile phone actually referred to as a “smartphone” was Ericsson’s 1997 model GS88 concept phone, which led in 2000 to the Ericsson model R380. This was the first mobile phone marketed as a smartphone ... but it had no camera.

With the introduction of camera phones and smartphones in 2000, and front-facing cameras in 2003, it wasn’t long before the most popular mobile phones were smartphones with two cameras. Now, just 13 years after this convergence of technology, it seems that smartphones are everywhere. These devices have evolved into very capable tools for high-resolution still and video photography, as well as photo processing and video editing using specialized applications that can be installed by the user.

With these capabilities available in a small, integrated mobile device, it’s no wonder that sales of dedicated digital cameras have been declining rapidly.

Impact of mobile phone cameras on dedicated camera sales

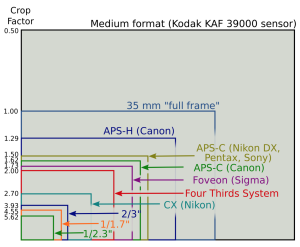

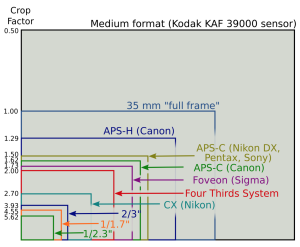

Here is a comparison of the digital image sensors on three representative modern cameras:

- Nikon D800 DSLR camera: 36 megapixels (7360 × 4912 pixels), FX full-frame (35.9 x 24.0 mm, 43.18 mm diagonal) CMOS image sensor

- Sony DSC-HX90V compact point-and-shoot camera: 18.2 megapixels (4896 x 3672 pixels), 1/2.3 type (6.17mm x 4.55mm, 7.67 mm diagonal) CMOS image sensor

- Apple iPhone 6 cameras: Main camera: 8 megapixels (3264 x 2448 pixels), 1/2.94 type (4.8mm x 3.6mm, 6.12 mm diagonal) CMOS, Sony Exmor RS image sensor. Front-facing camera: 1.2 megapixels

The Nikon’s FX sensor is the same size as the image frame on 35 mm film. This is called a “full frame” sensor. Most digital cameras have smaller image sensors, as shown in the following comparison chart.

Source: www.techhive.com

The iPhone 6 image sensor is smaller than any shown in the above chart. Nonetheless, its photo and video quality can be quite good.
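To put the size difference in numbers, here is a rough Python sketch that takes the sensor widths and heights listed above and computes each sensor’s diagonal (by the Pythagorean theorem) and its area relative to the full-frame Nikon sensor. The dictionary and function names are my own, chosen for illustration, and the results are only as precise as the rounded dimensions quoted in the list.

```python
import math

# (width_mm, height_mm) for the three sensors listed above.
SENSORS = {
    "Nikon D800 (FX full frame)": (35.9, 24.0),
    "Sony DSC-HX90V (1/2.3 type)": (6.17, 4.55),
    "Apple iPhone 6 (1/2.94 type)": (4.8, 3.6),
}


def diagonal_mm(width_mm: float, height_mm: float) -> float:
    """Sensor diagonal from its width and height."""
    return math.hypot(width_mm, height_mm)


def area_mm2(width_mm: float, height_mm: float) -> float:
    """Sensor area in square millimeters."""
    return width_mm * height_mm


full_frame_area = area_mm2(*SENSORS["Nikon D800 (FX full frame)"])

for name, (w, h) in SENSORS.items():
    ratio = full_frame_area / area_mm2(w, h)
    print(
        f"{name}: diagonal {diagonal_mm(w, h):.2f} mm, "
        f"full-frame area is {ratio:.1f}x this sensor"
    )
```

By this estimate, the full-frame sensor has roughly 50 times the light-gathering area of the iPhone 6 sensor. The listed 4.8mm x 3.6mm dimensions work out to a 6.0 mm diagonal, slightly less than the 6.12 mm quoted above, presumably because the quoted width and height are rounded.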

For more information on digital camera image sensors, check out the 2013 article by Jackie Dove, “Demystifying digital camera sensors once and for all,” at the following link:

http://www.techhive.com/article/2052159/demystifying-digital-camera-sensors-once-and-for-all.html

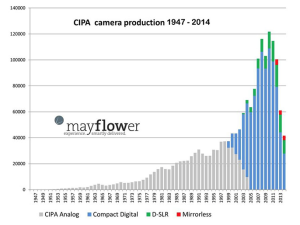

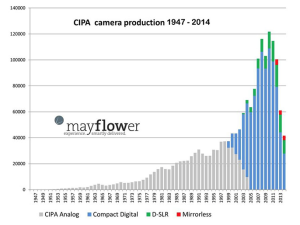

The rapid rise in the quality of mobile phone cameras is making small digital cameras redundant, and is having a dramatic impact on sales of dedicated cameras, as shown in the following chart.

Source: Mayflower Concepts, petapixel.com

The above chart indicates that only about 40 million dedicated cameras of all types were sold in 2014, far below the peak of about 120 million units in 2010. The biggest impact has been on compact digital cameras, with the DSLR cameras holding their own, at least for the moment.

While I still like my current Nikon DSLR, I have to admit that I’ve found some higher-end compact digital cameras that have most of the capabilities I want in an SLR but in a much smaller package. While I won’t make my mobile phone camera my primary camera, I may retire the DSLR.

Immediate communications and privacy

The rapid rise of the smartphone was enabled by the deployment of 3G and 4G cellular phone service. See my 20 March 2016 post on the evolution of cellular service for details on the deployment timeline.

With access to capable wireless communications networks and a host of photo and video applications and services, the cameras on mobile phones became tools for capturing images or videos of anything and instantly communicating these via the Internet to audiences that can span the globe. We’re now living in a world where many awkward moments get recorded, meals get photographed before they’re eaten, and there’s a need to post a selfie during an event to prove that you actually were there (and of course, to impress your friends). Thanks to the advent of the cloud, all of these digital photographic memories can be preserved online forever, or at least until you don’t want to continue paying for cloud storage.

Privacy is becoming a thing of the past. What happens in Vegas probably gets photographed by someone and, if you’re lucky, stays in the cloud ... until it’s needed, or hacked.

I don’t think Steve Sasson imagined such a future when he invented the first digital camera in 1975.