Peter Lobner

On 19 September 2016, California Governor Jerry Brown signed into law Senate Bill No. 1383, which requires the state to cut methane (CH4) emissions 40% below 2013 levels by 2030. Before I say anything about this bill and the associated technology for bovine methane control, you may want to read the full text of SB 1383 at the following link:

https://leginfo.legislature.ca.gov/faces/billNavClient.xhtml?bill_id=201520160SB1383

You’ll also find a May 2016 overview and analysis here:

https://www.ceres.org/files/water/sb-1383-slcp-summary/at_download/file

The problem statement from the cow’s perspective:

Cows are ruminants with a digestive system that includes a few digestive organs not found in the simpler monogastric digestive systems of humans and many other animals. Other ruminant species include sheep, goats, elk, deer, moose, buffalo, bison, giraffes and camels. Other monogastric species include apes, chimpanzees, horses, pigs, chickens and rhinos.

As explained by the BC Agriculture in the Classroom Foundation:

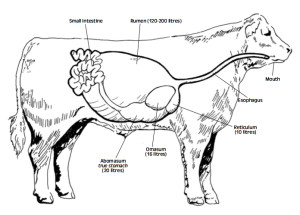

“Instead of one compartment to the stomach they (ruminants) have four. Of the four compartments the rumen is the largest section and the main digestive center. The rumen is filled with billions of tiny microorganisms that are able to break down (through a process called enteric fermentation) grass and other coarse vegetation that animals with one stomach (including humans, chickens and pigs) cannot digest.

Ruminant animals do not completely chew the grass or vegetation they eat. The partially chewed grass goes into the large rumen where it is stored and broken down into balls of “cud”. When the animal has eaten its fill it will rest and “chew its cud”. The cud is then swallowed once again where it will pass into the next three compartments—the reticulum, the omasum and the true stomach, the abomasum.”

Source: BC Agriculture in the Classroom Foundation

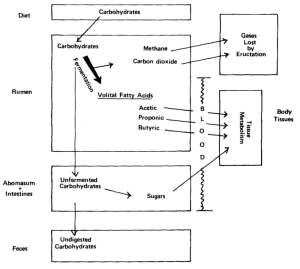

Generation of methane and carbon dioxide in ruminants results from their digestion of carbohydrates in the rumen (their largest digestive organ) as shown in the following process diagram. Cows don’t generate methane from metabolizing proteins or fats.

Source: Texas Agricultural Extension Service

You’ll find similar process diagrams for protein and fat digestion at the following link:

Argentina’s National Institute for Agricultural Technology (INTA) has conducted research into methane emissions from cows and determined that a cow produces about 300 liters of gas per day. At standard temperature and pressure (STP), that daily output exceeds the volume of a typical cow’s rumen (120 – 200 liters), so frequent bovine farting probably is necessary for the comfort and safety of the cow.
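To put INTA’s numbers side by side, here is a small Python sketch (the function and constant names are my own, for illustration only) that divides the reported daily gas output by the low and high ends of the quoted rumen-volume range. Like the paragraph above, it treats both figures as volumes at STP.

```python
# Figures reported by INTA, as quoted above (liters at STP).
DAILY_GAS_LITERS = 300.0
RUMEN_VOLUME_RANGE_LITERS = (120.0, 200.0)


def rumen_fills_per_day(daily_gas: float, rumen_volume: float) -> float:
    """Number of times one day's gas output would fill a rumen of the given volume."""
    return daily_gas / rumen_volume


if __name__ == "__main__":
    # Evaluate both ends of the quoted rumen-volume range.
    for volume in RUMEN_VOLUME_RANGE_LITERS:
        fills = rumen_fills_per_day(DAILY_GAS_LITERS, volume)
        print(f"{volume:.0f} L rumen: filled {fills:.1f} times per day")
```

With the quoted figures, one day’s gas would fill the rumen between 1.5 and 2.5 times over.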

The problem statement from the greenhouse gas perspective:

The U.S. Environmental Protection Agency (EPA) reported U.S. greenhouse gas emissions for the period from 1990 to 2014 in document EPA 430-R-16-002, which you can download at the following link:

https://www3.epa.gov/climatechange/Downloads/ghgemissions/US-GHG-Inventory-2016-Main-Text.pdf

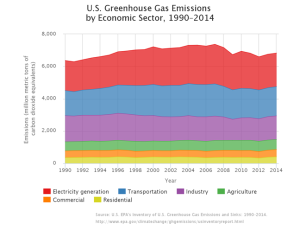

Greenhouse gas emissions by economic sector are shown in the following EPA chart.

For the period from 1990 to 2014, total emissions from the agricultural sector, in terms of CO2 equivalents, have been relatively constant.

Regarding methane contributions to greenhouse gas, the EPA stated:

“Methane is emitted during the production and transport of coal, natural gas, and oil. Methane emissions also result from livestock and other agricultural practices and by the decay of organic waste in municipal solid waste landfills.

Also, when animals’ manure is stored or managed in lagoons or holding tanks, CH4 is produced. Because humans raise these animals for food, the emissions are considered human-related. Globally, the Agriculture sector is the primary source of CH4 emissions.”

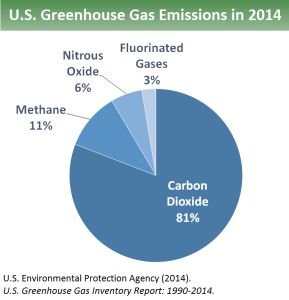

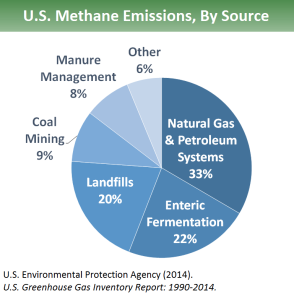

The components of U.S. 2014 greenhouse gas emissions and a breakdown of methane sources are shown in the following two EPA charts.

In 2014, methane made up 11% of total U.S. greenhouse gas emissions. Enteric fermentation is the process that generates methane in the rumen of cows and other ruminants, which collectively contribute 2.42% to total U.S. greenhouse gas emissions. Manure management from all sorts of farm animals collectively contributes another 0.88% to total U.S. greenhouse gas emissions.
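The EPA percentages above can be combined with a bit of arithmetic. The following Python sketch (names are illustrative, not taken from the EPA report) converts each livestock source’s share of total U.S. emissions into a share of U.S. methane emissions. Because the 11% figure is rounded, treat the results as approximate.

```python
# Shares of total 2014 U.S. greenhouse gas emissions (CO2 equivalents), in percent,
# as quoted from the EPA inventory above.
METHANE_SHARE_OF_TOTAL = 11.0
ENTERIC_SHARE_OF_TOTAL = 2.42
MANURE_SHARE_OF_TOTAL = 0.88


def share_of_methane(share_of_total: float,
                     methane_share: float = METHANE_SHARE_OF_TOTAL) -> float:
    """Convert a share of all GHG emissions into a share of methane emissions (percent)."""
    return 100.0 * share_of_total / methane_share


# Enteric fermentation plus manure management, as a share of all U.S. GHG emissions.
livestock_total = ENTERIC_SHARE_OF_TOTAL + MANURE_SHARE_OF_TOTAL

if __name__ == "__main__":
    print(f"Livestock share of all GHG:   {livestock_total:.2f}%")
    print(f"Enteric share of methane:     {share_of_methane(ENTERIC_SHARE_OF_TOTAL):.1f}%")
    print(f"Manure share of methane:      {share_of_methane(MANURE_SHARE_OF_TOTAL):.1f}%")
    print(f"Livestock share of methane:   {share_of_methane(livestock_total):.1f}%")
```

With these inputs, enteric fermentation works out to roughly a fifth of U.S. methane emissions, and enteric fermentation plus manure management to roughly 30%.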

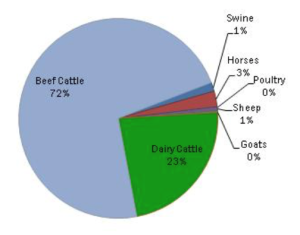

EPA data from 2007 shows the following distribution of sources of enteric fermentation among farting farm animals.

Source: EPA, 2007

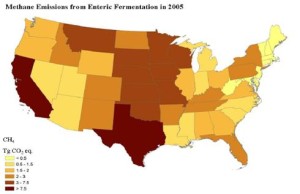

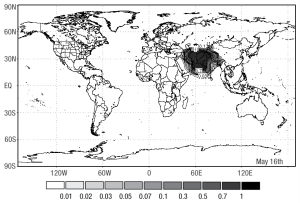

So it’s clear that cattle are the culprits. By state, the distribution of methane production from enteric fermentation is shown in the following map.

Source: U.S. Department of Agriculture, 2005

On this map, California and Texas appear to be the largest generators of methane from ruminants. More recent data on the cattle population in each state as of 1 January 2015 is available at the following link:

http://www.cattlenetwork.com/advice-and-tips/cowcalf-producer/cattle-inventory-ranking-all-50-states

Here, the top five states based on cattle population are: (1) Texas @ 11.8 million, (2) Nebraska @ 6.3 million, (3) Kansas @ 6.0 million, (4) California @ 5.2 million, and (5) Oklahoma @ 4.6 million. Total U.S. population of cattle and calves is about 89.5 million.
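To see how concentrated the U.S. herd is, this Python sketch (illustrative names only) computes each top-five state’s share of the 89.5 million total, using nothing but the 1 January 2015 inventory figures listed above.

```python
# Cattle and calf inventories as of 1 January 2015, in millions of head (from the text).
TOP_FIVE_STATES = {
    "Texas": 11.8,
    "Nebraska": 6.3,
    "Kansas": 6.0,
    "California": 5.2,
    "Oklahoma": 4.6,
}
US_TOTAL_MILLIONS = 89.5


def percent_of_us_herd(head_millions: float, total: float = US_TOTAL_MILLIONS) -> float:
    """Share of the total U.S. cattle and calf population, in percent."""
    return 100.0 * head_millions / total


if __name__ == "__main__":
    for state, head in TOP_FIVE_STATES.items():
        print(f"{state:<11} {head:5.1f} M  {percent_of_us_herd(head):5.1f}%")
    top_five = sum(TOP_FIVE_STATES.values())
    print(f"Top five:   {top_five:5.1f} M  {percent_of_us_herd(top_five):5.1f}%")
```

Together, the top five states hold about 33.9 million head, a bit under 38% of the U.S. total; California’s 5.2 million head is under 6%.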

This brings us back to California’s new law.

The problem statement from the California legislative perspective:

The state has the power to do this, as summarized in the preamble to SB 1383:

“The California Global Warming Solutions Act of 2006 designates the State Air Resources Board as the state agency charged with monitoring and regulating sources of emissions of greenhouse gases. The state board is required to approve a statewide greenhouse gas emissions limit equivalent to the statewide greenhouse gas emissions level in 1990 to be achieved by 2020. The state board is also required to complete a comprehensive strategy to reduce emissions of short-lived climate pollutants, as defined, in the state.”

Specific requirements that apply to the state’s bovine population include the following:

“Work with stakeholders to identify and address technical, market, regulatory, and other challenges and barriers to the development of dairy methane emissions reduction projects.” [39730.7(b)(2)(A)]

“Conduct or consider livestock and dairy operation research on dairy methane emissions reduction projects, including, but not limited to, scrape manure management systems, solids separation systems, and enteric fermentation.” [39730.7(b)(2)(C)(i)]

“Enteric emissions reductions shall be achieved only through incentive-based mechanisms until the state board, in consultation with the department, determines that a cost-effective, considering the impact on animal productivity, and scientifically proven method of reducing enteric emissions is available and that adoption of the enteric emissions reduction method would not damage animal health, public health, or consumer acceptance. Voluntary enteric emissions reductions may be used toward satisfying the goals of this chapter.” [39730.7(f)]

By 1 July 2020, the State Air Resources Board is required to assess the progress made by the dairy and livestock sector in achieving the goals for methane reduction. If this assessment shows that progress has not been made because of insufficient funding or technical or market barriers, then the state has the leeway to reduce the goals for methane reduction.

Possible technical solutions

As shown in a chart above, several different industries contribute to methane production. One way to achieve most of California’s 40% reduction goal in the next 14 years would be to simply move all cattle and dairy cow businesses out of state and clean up the old manure management sites. While this actually may happen for economic reasons, let’s look at some technical alternatives.

- Breed cows that generate less methane

- Develop new feed that helps cows digest their food better and produce less methane

- Put a plug in it

- Collect the methane from the cows

Any type of genetically modified organism (GMO) doesn’t go over well in California, so I think a genetically modified, low-methane cow is simply a non-starter.

A cow’s diet consists primarily of carbohydrates, usually from parts of plants that are not suitable as food for humans and many other animals. The first step in the ruminant digestion process is fermentation in the rumen, and this is the source of methane gas. The only dietary option, then, would be to put cows on a low-carb diet, which would be impossible to implement for cows that are allowed to graze in the field.

Based on a cow’s methane production rate, putting a cork in it is a very short-term solution, at best, and you’ll probably irritate the cow. However, some humorists find this to be an option worthy of further examination.

Source: Taint


That leaves us with the technical option of collecting the methane from the cows. Two basic options exist: collect the methane from the rumen, or from the other end of the cow. I was a bit surprised that several examples of methane collecting “backpacks” have been developed for cows. Unanimously, and much to the relief of the researchers, the international choice for methane collection has been from the rumen.

So, what does a fashionable, environmentally-friendly cow with a methane-collecting backpack look like?

Argentina’s INTA took first place with the sleek blue model shown below.

Another INTA example was larger and more colorful, but considerably less stylish. Even if this INTA experiment fails to yield a practical solution for collecting methane from cows, it clearly demonstrates that cows have absolutely no self-esteem.

In Australia, these cows are wearing smaller backpacks just to measure their emissions.

Time will tell if methane collection devices become de rigueur for cattle and dairy cows in California or anywhere else in the world. While this could spawn a whole new industry for tending those inflating collection devices and making productive use of the collected methane, I can’t imagine that the California economy could actually support the cost of managing such devices for all of the state’s 5.2 million cattle and dairy cows.
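For a rough sense of the scale of collection, this Python sketch multiplies INTA’s figure of about 300 liters of gas per cow per day by California’s 5.2 million head. It assumes, for illustration only, that every animal in the state produces gas at that single rate, which is a simplification since actual output varies from animal to animal; the function and constant names are hypothetical.

```python
# Inputs quoted in the post. Applying one per-head rate to every animal
# is a simplifying assumption made only for this rough estimate.
LITERS_PER_HEAD_PER_DAY = 300.0  # INTA estimate for one cow
CALIFORNIA_HEAD = 5.2e6          # California cattle and calves, 1 January 2015
DAYS_PER_YEAR = 365


def statewide_gas_m3_per_day(head: float, liters_per_head: float) -> float:
    """Total daily gas volume in cubic meters (1 m^3 = 1,000 L)."""
    return head * liters_per_head / 1000.0


if __name__ == "__main__":
    daily = statewide_gas_m3_per_day(CALIFORNIA_HEAD, LITERS_PER_HEAD_PER_DAY)
    print(f"Per day:  {daily:,.0f} m^3")
    print(f"Per year: {daily * DAYS_PER_YEAR:,.0f} m^3")
```

Under that assumption, roughly 1.6 million cubic meters of gas would need to be captured, stored and hauled away every day.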

Of all the things we need in California, managing methane from cow farts (oops, I meant to say enteric fermentation) probably is at the very bottom of most people’s lists, unless they’re on the State Air Resources Board.

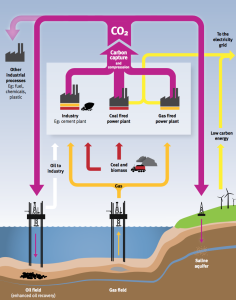

20 February 2019 Update: “Negative Emissions Technology” (NET) may be an appropriate solution to methane production from ruminant animals

In my 19 February 2019 post, “Converting Carbon Dioxide into Useful Products,” I discussed the use of NETs as a means to reduce atmospheric carbon dioxide by deploying carbon dioxide removal “factories” that can be sited independently from the sources of carbon dioxide generation. An appropriately scaled and sited NET could mitigate the effects of methane released to the atmosphere from all ruminant animals in a selected region, with the added benefit of not interfering directly with the animals. You can read my post here:

https://lynceans.org/all-posts/converting-carbon-dioxide-into-useful-products/