Peter Lobner

On 25 May 1961, President John F. Kennedy made an important speech to a joint session of Congress in which he stated:

“I believe that this nation should commit itself to achieving the goal, before this decade is out, of landing a man on the moon and returning him safely to the Earth.”

This was a very bold statement considering the state of the art of U.S. aerospace technology in mid-1961. Yuri Gagarin became the first man to orbit the Earth on 12 April 1961 in a Soviet Vostok spacecraft, and Alan Shepard completed the first Project Mercury suborbital flight on 5 May 1961. No American had yet flown in orbit. It wasn’t until 20 February 1962 that the first Project Mercury capsule flew into Earth orbit with astronaut John Glenn. The Soviets had hit the Moon with Luna 2 and returned photos from the far side of the Moon with Luna 3. The U.S. had only made one distant lunar flyby with the tiny Pioneer 4 spacecraft. The Apollo manned lunar program was underway, but still in the concept definition phase. The first U.S. heavy booster rocket designed to support the Apollo program, the Saturn 1, didn’t fly until 27 October 1961.

President Kennedy concluded this part of his 25 May 1961 speech with the following admonition:

“This decision (to proceed with the manned lunar program) demands a major national commitment of scientific and technical manpower, materiel and facilities, and the possibility of their diversion from other important activities where they are already thinly spread. It means a degree of dedication, organization and discipline, which have not always characterized our research and development efforts. It means we cannot afford undue work stoppages, inflated costs of material or talent, wasteful interagency rivalries, or a high turnover of key personnel.

New objectives and new money cannot solve these problems. They could in fact, aggravate them further–unless every scientist, every engineer, every serviceman, every technician, contractor, and civil servant gives his personal pledge that this nation will move forward, with the full speed of freedom, in the exciting adventure of space.”

This was the spirit that led to the great success of the Apollo program, which landed the first men on the Moon, astronauts Neil Armstrong and Buzz Aldrin, on 20 July 1969, a little more than 8 years after President Kennedy’s speech.

NASA’s plans for manned Mars exploration

By 1964, exciting concepts for manned Mars exploration vehicles were being developed under National Aeronautics and Space Administration (NASA) contract by several firms. One example is a Mars lander design shown below from Aeronutronic (then a division of Philco Corp). A Mars Excursion Module (MEM) would descend to the surface of Mars from a larger Mars Mission Module (MMM) that remained in orbit. The MEM was designed for landing a crew of three on Mars, spending 40 days on the Martian surface, and then returning the crew back to Mars orbit and rendezvousing with the MMM for the journey back to Earth.

Source: NASA / Aviation Week 24Feb64


This and other concepts developed in the 1960s are described in detail in Chapters 3 – 5 of NASA’s Monograph in Aerospace History #21, “Humans to Mars – Fifty Years of Mission Planning, 1950 – 2000,” which you can download at the following link:

http://www.nss.org/settlement/mars/2001-HumansToMars-FiftyYearsOfMissionPlanning.pdf

In the 1960s, the U.S. nuclear thermal rocket development program led to the development of the very promising NERVA nuclear engine for use in an upper stage or an interplanetary spacecraft. NASA and the Space Nuclear Propulsion Office (SNPO) felt that tests had “confirmed that a nuclear rocket engine was suitable for space flight application.”

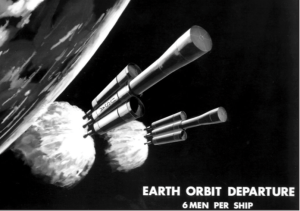

In 1969, Marshall Space Flight Center Director Wernher von Braun proposed sending 12 men to Mars aboard two spacecraft, each propelled by three NERVA engines. Each spacecraft would have measured 270 feet long and 100 feet wide across the three nuclear engine modules, with a mass of 800 tons, including 600 tons of liquid hydrogen propellant for the NERVA engines. The two outboard nuclear engine modules would be used only to inject the spacecraft onto its trans-Mars trajectory, after which they would separate from the spacecraft. The central nuclear engine module would continue with the manned spacecraft and be used to enter and leave Mars orbit and enter Earth orbit at the end of the mission. The mission would launch in November 1981 and land on Mars in August 1982.

NERVA-powered Mars spacecraft. Source: NASA / Monograph #21
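To put the 800-ton and 600-ton figures above in perspective, here is a minimal sketch of the propellant mass fraction and a rough ideal velocity change from the Tsiolkovsky rocket equation. The specific impulse of 825 seconds is my assumption of a commonly quoted NERVA-class value; it is not a figure from von Braun's proposal. The sketch also treats the vehicle as a single stage that burns all of its hydrogen in one go, which the real mission plan (with its separating outboard modules and multiple burns) would not have done.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2

# Figures from the 1969 proposal as described in the text (tons).
TOTAL_MASS_T = 800.0
PROPELLANT_MASS_T = 600.0

# ASSUMPTION: a commonly quoted NERVA-class specific impulse, in seconds.
# This value is illustrative and does not come from the proposal itself.
ASSUMED_ISP_S = 825.0


def propellant_fraction(total_mass_t, propellant_mass_t):
    """Fraction of the departure mass that is propellant."""
    return propellant_mass_t / total_mass_t


def ideal_delta_v(total_mass_t, propellant_mass_t, isp_s):
    """Ideal single-stage velocity change in m/s (Tsiolkovsky equation)."""
    final_mass_t = total_mass_t - propellant_mass_t
    return isp_s * G0 * math.log(total_mass_t / final_mass_t)


fraction = propellant_fraction(TOTAL_MASS_T, PROPELLANT_MASS_T)
delta_v = ideal_delta_v(TOTAL_MASS_T, PROPELLANT_MASS_T, ASSUMED_ISP_S)

print(f"Propellant fraction: {fraction:.2f}")
print(f"Ideal single-stage delta-v: {delta_v / 1000:.1f} km/s")
```

Three quarters of the departure mass is hydrogen. The velocity figure is only an order-of-magnitude indication, since it depends entirely on the assumed specific impulse and on the single-stage simplification.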


NASA’s momentum for conducting a manned Mars mission by the 1980s was short-lived. Development of the super heavy lift Nova booster, which was intended to place about 250 tons into low Earth orbit (LEO), was never funded. Congress reduced NASA’s funding in the FY-69 budget, resulting in NASA ending production of the Saturn 5 heavy-lift booster rocket (about 100 tons to LEO) and cancelling Apollo missions after Apollo 17. This left NASA without the heavy-lift booster rocket needed to carry NERVA and/or assembled interplanetary spacecraft into orbit.

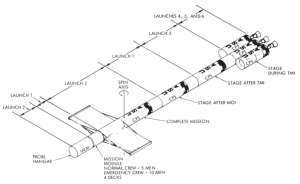

NASA persevered with chemical rocket powered Mars mission concepts until 1971. The final NASA concept vehicle from that era, looking much like von Braun’s 1969 nuclear-powered spacecraft, is shown below.

Source: NASA / Monograph #21

The 24-foot diameter modules would have required six Shuttle-derived launch vehicles (essentially the large center tank and the strap-on solid boosters, without the Space Shuttle itself) to deliver the various modules for assembly in orbit.

By then no longer a factor in Mars mission planning, the nuclear rocket program was canceled in 1972. You can read a history of the U.S. nuclear thermal rocket program at the following links:

http://www.lanl.gov/science/NSS/issue1_2011/story4full.shtml

and,

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19910017902.pdf

NASA budget realities in subsequent years, dictated largely by the cost of Space Shuttle and International Space Station development and operation, reduced NASA’s manned Mars efforts to a series of design studies, as described in Monograph #21.

Science Applications International Corporation (SAIC) conducted manned Mars mission studies for NASA in 1984 and 1987. The latter mission design study was conducted in conjunction with astronaut Sally Ride’s August 1987 report, Leadership and America’s Future in Space. You can read this report at the following link:

http://history.nasa.gov/riderep/cover.htm

Details on the 1987 SAIC mission study are included in Chapter 8 of Monograph #21. SAIC’s mission concept employed two chemically-fueled Mars spacecraft in “split/sprint” roles. An automated cargo-carrying spacecraft would be first to depart Earth. It would fly an energy-saving trajectory and enter Mars orbit carrying the fuel needed by the future manned spacecraft for its return to Earth. After the cargo spacecraft was in Mars orbit, the manned spacecraft would be launched on a faster “sprint” trajectory, taking about six months to get to Mars. With one month allocated for exploration of the Martian surface, total mission time would be on the order of 12 – 14 months.

President Obama’s FY-11 budget redirected NASA’s focus away from manned missions to the Moon and Mars. The result is that there are no current programs with near-term goals to establish a continuous U.S. presence on the Moon or conduct the first manned mission to Mars. Instead, NASA is engaged in developing hardware that will be used initially for a relatively near-Earth (but further out than astronauts have gone before) “asteroid re-direct mission.” NASA’s current vision for getting to Mars is summarized below.

- In the 2020s, NASA will send astronauts on a year-long mission into (relatively near-Earth) deep space, verifying spacecraft habitation and testing our readiness for a Mars mission.

- In the 2030s, NASA will send astronauts first to low-Mars orbit. This phase will test the entry, descent and landing techniques needed to get to the Martian surface and study what’s needed for in-situ resource utilization.

- Eventually, NASA will land humans on Mars.

You can read NASA’s Journey to Mars Overview at the following link:

https://www.nasa.gov/content/journey-to-mars-overview

NASA’s current plans for getting to Mars don’t really sound like much of a plan to me. Think back to President Kennedy’s speech that outlined the national commitment needed to accomplish a lunar landing within the decade of the 1960s. There is no real sense of timeliness in NASA plans for getting to Mars.

Thinking back to the title of NASA’s Monograph #21, “Humans to Mars – Fifty Years of Mission Planning, 1950 – 2000,” I’d say that NASA is quite good at manned Mars mission planning, but woefully short on execution. I recognize that NASA’s ability to execute anything is driven by its budget. However, in 1969, Wernher von Braun thought the U.S. was about 12 years from being able to launch a nuclear-powered manned Mars mission in 1981. Now it seems we’re almost 20 years away, with no real concept for the spacecraft that will get our astronauts there and back.

Commercial plans for manned Mars exploration

Fortunately, the U.S. commercial aerospace sector seems more committed to conducting manned Mars missions than NASA. The leading U.S. contenders are Bigelow Aerospace and SpaceX. Let’s look at their plans.

Bigelow Aerospace

Bigelow is developing expandable structures that can be used to house various types of occupied spaces on manned Earth orbital platforms or on spacecraft destined for lunar orbital missions or long interplanetary missions. Versions of these expandable structures also can be used for habitats on the surface of the Moon, Mars, or elsewhere.

The first operational use of this type of expandable structure in space occurred on 26 May 2016, when the BEAM (Bigelow Expandable Activity Module) was deployed to its full size on the International Space Station (ISS). BEAM was expanded by air pressure from the ISS.

BEAM installed in the ISS. Source: Bigelow Aerospace


You can view a NASA time-lapse video of BEAM deployment at the following link:

https://www.youtube.com/watch?v=QxzCCrj5ssE

A large, complex space vehicle can be built with a combination of relatively conventional structures and Bigelow inflatable modules, as shown in the following concept drawing.

Source: Bigelow Aerospace


A 2011 NASA concept named Nautilus-X, also making extensive use of inflatable structures, is shown in the following concept drawing. Nautilus is an acronym for Non-Atmospheric Universal Transport Intended for Lengthy United States Exploration.

Source: NASA / NASA Technology Applications Assessment Team

SpaceX

SpaceX announced that it plans to send its first Red Dragon capsule to Mars in 2018 to demonstrate the ability to land heavy loads using a combination of aerobraking with the capsule’s ablative heat shield and propulsive braking using rocket engines for the final phase of landing.

Source: SpaceX


More details on the Red Dragon spacecraft are in a 2012 paper by Karcz, J. et al., entitled, “Red Dragon: Low-cost Access to the Surface of Mars Using Commercial Capabilities,” which you’ll find at the following link:

https://www.nas.nasa.gov/assets/pdf/staff/Aftosmis_M_RED_DRAGON_Low-Cost_Access_to_the_Surface_of_Mars_Using_Commercial_Capabilities.pdf

NASA is collaborating with SpaceX to gain experience with this landing technique, which NASA expects to employ in its own future Mars missions.

On 27 September 2016, SpaceX CEO Elon Musk unveiled his grand vision for colonizing Mars at the 67th International Astronautical Congress in Guadalajara, Mexico. You’ll find an excellent summary in the 29 September 2016 article by Dave Mosher entitled, “Elon Musk’s complete, sweeping vision on colonizing Mars to save humanity,” which you can read on the Business Insider website at the following link:

http://www.businessinsider.com/elon-musk-mars-speech-transcript-2016-9

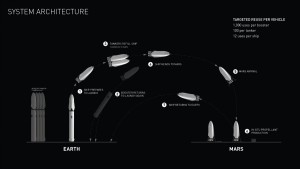

The system architecture for the SpaceX colonizing flights is shown in the following diagram. Significant features include:

- 100 passengers on a one-way trip to Mars

- Booster and spacecraft are reusable

- No spacecraft assembly in orbit required.

- The manned interplanetary vehicle is fueled with methane in Earth orbit from a tanker spacecraft.

- The entire manned interplanetary vehicle lands on Mars. There is no part of the vehicle left orbiting Mars.

- The 100 passengers disembark to colonize Mars

- Methane fuel for the return voyage to Earth is manufactured on the surface of Mars.

- The spacecraft returns to Earth for reuse on another mission.

- Price per person for Mars colonists could be in the $100,000 to $200,000 range.

The Mars launcher for this mission would have a gross lift-off mass of 10,500 tons, about 3.5 times the mass of NASA’s Saturn 5 booster for the Apollo Moon landing program.

Source: SpaceX
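As a quick sanity check on the lift-off mass comparison above, the short sketch below works out the Saturn 5 mass implied by the 10,500-ton and 3.5-times figures. The 2,970-ton Saturn 5 lift-off mass used for the cross-check is my assumption of a commonly cited round value, not a number from the SpaceX presentation.

```python
# Figures quoted in the text.
MARS_LAUNCHER_MASS_T = 10_500.0
QUOTED_RATIO = 3.5

# ASSUMPTION: a commonly cited Saturn 5 gross lift-off mass, in metric tons.
ASSUMED_SATURN_5_MASS_T = 2_970.0


def implied_reference_mass(launcher_mass_t, ratio):
    """Mass of the reference vehicle implied by a quoted mass ratio."""
    return launcher_mass_t / ratio


saturn_5_implied_t = implied_reference_mass(MARS_LAUNCHER_MASS_T, QUOTED_RATIO)
ratio_from_assumed_mass = MARS_LAUNCHER_MASS_T / ASSUMED_SATURN_5_MASS_T

print(f"Saturn 5 mass implied by the quoted ratio: {saturn_5_implied_t:.0f} tons")
print(f"Ratio using the assumed Saturn 5 mass: {ratio_from_assumed_mass:.2f}")
```

The two approaches agree to within a few percent, so the quoted factor of 3.5 looks like a reasonable rounding.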


Terraforming Mars

Colonizing Mars will require terraforming to transform the planet so it can sustain human life. Terraforming the hostile environment of another planet has never been done before. While there are theories about how to accomplish Martian terraforming, there currently is no clear roadmap. However, there is a new board game named, “Terraforming Mars,” that will test your skills at using limited resources wisely to terraform Mars.

Nate Anderson provides a detailed introduction to this board game in his 1 October 2016 article entitled, “Terraforming Mars review: Turn the ‘Red Planet’ green with this amazing board game,” which you can read at the following link:

http://arstechnica.com/gaming/2016/10/terraforming-mars-review/?utm_source=howtogeek&utm_medium=email&utm_campaign=newsletter

Source: Stronghold Games


Source: Nate Anderson / arsTECHNICA

Nate Anderson described the game as follows:

“In Terraforming Mars, you play one of several competing corporations seeking to terraform the Red Planet into a livable—indeed, hospitable—place filled with cows, dogs, fish, lichen, bacteria, grasslands, atmosphere, and oceans. That goal is achieved when three things happen: atmospheric oxygen rises to 14 percent, planetary temperature rises to 8°C, and all nine of the game’s ocean tiles are placed.

Real science rests behind each of these numbers. The ocean tiles each represent one percent coverage of the Martian surface; once nine percent of the planet is covered with water, Mars should develop its own sustainable hydrologic cycle. An atmosphere of 14 percent oxygen is breathable by humans (though it feels like a 3,000 m elevation on Earth). And at 8°C, water will remain liquid in the Martian equatorial zone.

Once all three milestones have been achieved, Mars has been successfully terraformed, the game ends, and scores are calculated.”
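The comparison between 14 percent oxygen and a 3,000 m elevation on Earth can be checked with a rough calculation. The sketch below is mine, not part of the game or of Anderson's review. It assumes the terraformed atmosphere has a total pressure of one standard Earth atmosphere (the quote does not say), and it uses the standard-atmosphere barometric formula for Earth's lower atmosphere to find the altitude with the same oxygen partial pressure.

```python
# Standard-atmosphere constants for Earth's troposphere.
P0_PA = 101_325.0          # sea-level pressure, Pa
T0_K = 288.15              # sea-level temperature, K
LAPSE_K_PER_M = 0.0065     # temperature lapse rate, K/m
EXPONENT = 5.25588         # g*M / (R*L) for dry air
O2_FRACTION_EARTH = 0.2095 # oxygen fraction of Earth's air by volume

# ASSUMPTION: the terraformed atmosphere has a total pressure of 1 atm.
ASSUMED_TOTAL_PRESSURE_PA = P0_PA
O2_FRACTION_GAME = 0.14    # oxygen target quoted from the game


def pressure_at_altitude(h_m):
    """Total air pressure (Pa) at altitude h_m on Earth."""
    return P0_PA * (1.0 - LAPSE_K_PER_M * h_m / T0_K) ** EXPONENT


def equivalent_altitude(o2_partial_pa):
    """Earth altitude (m) where oxygen partial pressure equals o2_partial_pa."""
    total_pa = o2_partial_pa / O2_FRACTION_EARTH
    return (T0_K / LAPSE_K_PER_M) * (1.0 - (total_pa / P0_PA) ** (1.0 / EXPONENT))


o2_partial = O2_FRACTION_GAME * ASSUMED_TOTAL_PRESSURE_PA
altitude_m = equivalent_altitude(o2_partial)

print(f"Oxygen partial pressure: {o2_partial / 1000:.1f} kPa")
print(f"Equivalent Earth altitude: {altitude_m:.0f} m")
```

Under that one-atmosphere assumption, the equivalent altitude comes out a little above 3,000 m, in line with the figure in the review. A thinner total atmosphere would correspond to a higher equivalent altitude.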

The players are competing corporations, each with limited resources. The game play evolves based on how each player (corporation) chooses to spend their resources to build their terraforming engines (constrained by some rules of precedence), and the opportunities dealt to them in each round.

You can buy the game Terraforming Mars on Amazon.

So, before you sign up with SpaceX to become a Martian colonist, practice your skills at terraforming Mars. You’ll be in high demand as an expert terraformer when you get to Mars on a SpaceX colonist ship in the late 2020s.